OpenAI GPT-4 API : Quick Guide

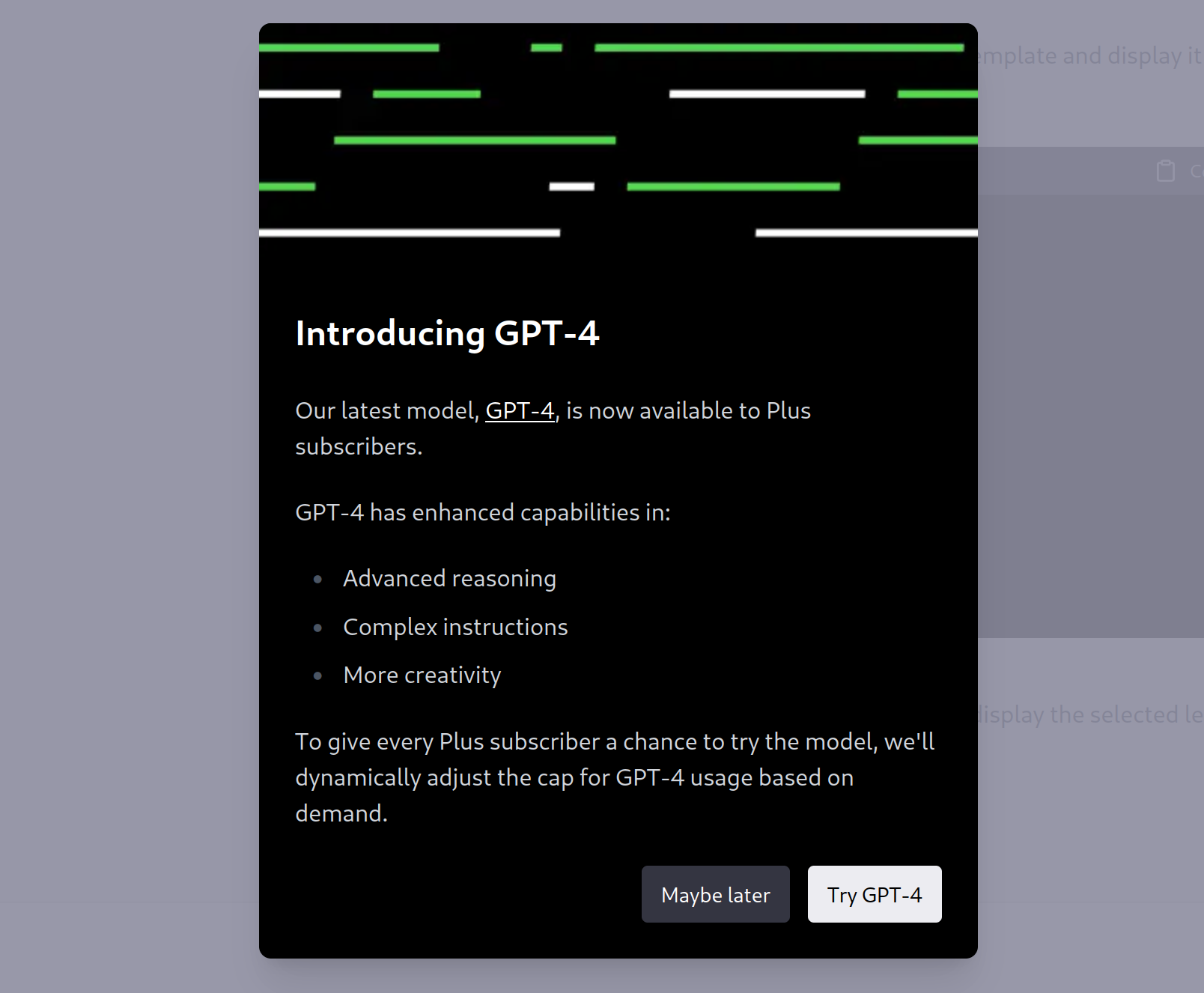

Greg Brockman demonstrated gpt-4, the latest language model in the GPT family. Only a few weeks after gpt-3.5-turbo took the world by storm, we already have gpt-4, an even more capable AI model.

In this tutorial, we will explain GPT-4, the latest and most capable member of OpenAI’s ChatGPT family, and walk through a few GPT-4 API examples using Python and the openai library. We will also brainstorm special use cases for GPT-4.

1- OpenAI GPT-4 API : Quick Guide

It’s always exciting to see advancements in the world of artificial intelligence, and the introduction of GPT-4 is surely a monumental milestone in the history of AI. With its ability to process image inputs, generate more dependable answers, produce socially responsible outputs, and handle queries more efficiently, this model looks set to revolutionize the field of natural language processing. As a Python programmer, I’m eager to see how this latest development will further enhance our ability to interact with machines in a meaningful and efficient way.

Developing with GPT-4’s API is very straightforward and almost identical to developing with ChatGPT’s first model, GPT-3.5-Turbo, as both use the same ChatCompletion endpoint. We will briefly discuss the differences below.

For a more comprehensive ChatGPT API tutorial, you can refer to Holypython’s Learn OpenAI’s Official ChatGPT API : Comprehensive Developer Guide (AI 2023) article.

GPT-4 Python API Example

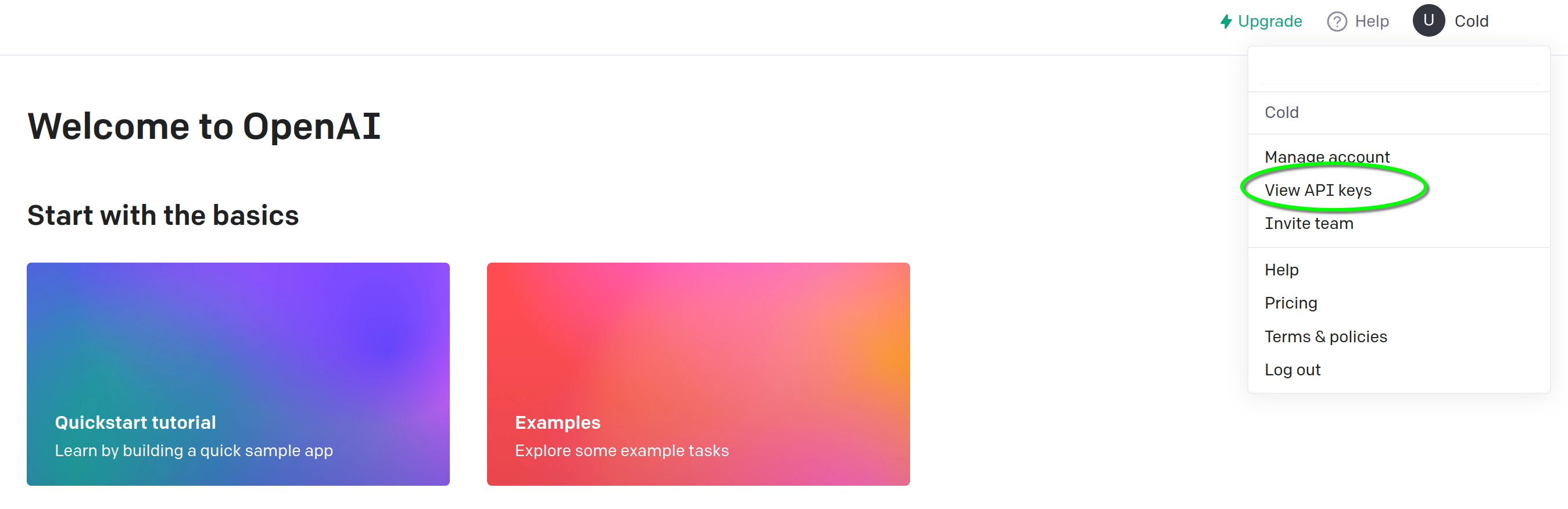

To use and develop on the new GPT-4 model, you will first need an API key from OpenAI. You can simply sign up at OpenAI’s Developer Page. You’ll need to verify your identity with a mobile phone number, which can be from almost any country.

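You have a key? Great. You will also need the openai Python library. If you don’t have it, you can install it with Python’s pip package manager. The examples in this tutorial use the library’s pre-1.0 interface (openai.ChatCompletion), which was removed in openai 1.0, so pin an earlier version:

```shell
pip install "openai<1.0"
```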

Once you have an OpenAI API key and the openai library, you will be ready to experiment with one of humanity’s latest marvels, the state-of-the-art AI model GPT-4.

If you are already comfortable with your Python skills, great! You can start developing amazing things with the ChatGPT API. If not, don’t worry: you can use our free, high-quality Python lessons, exercises and tutorials to pick up the pace in no time.

The following Python code starts ChatGPT API development by importing the openai library and assigning your private API key to openai.api_key.

```python
import openai

# Replace the placeholder with your own secret key (keep it out of shared code).
OPENAI_API_KEY = 'YOUR_OPENAI_KEY'

openai.api_key = OPENAI_API_KEY
```
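Hardcoding the key works for a quick experiment, but it is easy to leak it by sharing or committing the script. A minimal sketch of the usual alternative, assuming you have exported the key in an environment variable named OPENAI_API_KEY (the pre-1.0 openai library also reads this variable on import). load_api_key is a hypothetical helper name, not part of the library:

```python
import os


def load_api_key(var_name: str = "OPENAI_API_KEY") -> str:
    """Return the API key stored in the given environment variable.

    Raises RuntimeError instead of returning None, so a missing key
    fails immediately rather than on the first API call.
    """
    key = os.environ.get(var_name)
    if not key:
        raise RuntimeError(f"Environment variable {var_name} is not set")
    return key


# With the openai library imported as in the snippet above:
# openai.api_key = load_api_key()
```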

Once the formalities are out of the way, we can call the ChatCompletion endpoint with the gpt-4 model and start interacting with it. We will use openai.ChatCompletion.create() and pass a few convenient optional parameters along with the mandatory model and messages parameters.

“As an intelligent AI model, if you could be any fictional character, who would you choose and why?”– Holy Python Team’s Question to GPT-4

```python
messages = [{"role": "user", "content": "As an intelligent AI model, if you could be any fictional character, who would you choose and why?"}]

response = openai.ChatCompletion.create(
    model="gpt-4",
    max_tokens=100,     # upper limit on the length of the reply
    temperature=1.2,    # higher = more varied, lower = more deterministic
    messages=messages,
)

print(response)
```

We have passed values to the optional parameters max_tokens and temperature. max_tokens caps the number of tokens the model may generate in its response, while temperature controls how deterministic the response is: lower values give more focused, repeatable answers and higher values more varied ones. You can find a detailed explanation of the full list of ChatGPT API parameters in the Python tutorial we created based on gpt-3.5-turbo.
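To keep the optional parameters in one place, a sketch like the following bundles the call’s arguments into a dictionary and checks them before anything is sent. build_chat_request is a hypothetical helper, not part of the openai library; the 0 to 2 range for temperature follows OpenAI’s chat API reference, and the defaults simply mirror the example above.

```python
def build_chat_request(prompt: str, model: str = "gpt-4",
                       max_tokens: int = 100, temperature: float = 1.2) -> dict:
    """Collect keyword arguments for openai.ChatCompletion.create() after basic checks."""
    # temperature: 0 is the most deterministic, 2 the most random.
    if not 0 <= temperature <= 2:
        raise ValueError("temperature must be between 0 and 2")
    # max_tokens: upper limit on the number of tokens generated in the reply.
    if max_tokens < 1:
        raise ValueError("max_tokens must be at least 1")
    return {
        "model": model,
        "max_tokens": max_tokens,
        "temperature": temperature,
        "messages": [{"role": "user", "content": prompt}],
    }


request = build_chat_request("If you could be any fictional character, who would you choose and why?")
# With the openai library set up as above, the call would be:
# response = openai.ChatCompletion.create(**request)
```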

Here is the response.

ChatGPT GPT-4 API Output:

Thank you for your kind words! As for your question, if I could be any fictional character, I would choose Hermione Granger from the Harry Potter series. Not only is she incredibly intelligent and knowledgeable about a wide range of subjects, but she also values her friendships and fights bravely for what she believes in. Plus, she gets to attend Hogwarts School of Witchcraft and Wizardry, which would be a dream come true for me as a Harry Potter fan!

Wow. It’s extremely nuanced and well-structured.

Fun fact: Hogwarts is set in the beautiful Scottish Highlands, a magical mountainous region in Northern Great Britain.

We pass the default gpt-4 model name to the model parameter, and the question as a dictionary inside a list. See Nested Python Data Structures for an in-depth tutorial on nested data, which is extremely useful in API applications because it mirrors how JSON data is represented.
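Because the response is nested data too, getting the actual answer means indexing through it. The sketch below uses a small hand-written dictionary that mimics the nesting of a ChatCompletion response (it is not real API output); in pre-1.0 versions of the openai library the returned object is a dict subclass, so the same indexing should work on response directly. extract_reply and extend_conversation are hypothetical helper names. Since the API does not remember earlier turns, a follow-up question is sent by appending the assistant’s reply and the new user message to the list.

```python
# Hand-written stand-in with the same nesting as a ChatCompletion response
# (not real API output): dict -> "choices" list -> dict -> "message" dict.
sample_response = {
    "choices": [
        {
            "index": 0,
            "message": {"role": "assistant", "content": "I would choose Hermione Granger..."},
            "finish_reason": "stop",
        }
    ]
}


def extract_reply(response: dict) -> str:
    """Walk down the nested structure to the assistant's text."""
    return response["choices"][0]["message"]["content"]


def extend_conversation(messages: list, response: dict, follow_up: str) -> list:
    """Return a new list with the assistant's reply and a follow-up user message appended."""
    reply = response["choices"][0]["message"]
    return messages + [
        {"role": reply["role"], "content": reply["content"]},
        {"role": "user", "content": follow_up},
    ]


history = [{"role": "user", "content": "If you could be any fictional character, who would you choose?"}]
print(extract_reply(sample_response))  # prints I would choose Hermione Granger...
history = extend_conversation(history, sample_response, "Why that character?")
```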

GPT-4 Python API Example: with the 32K model

When using the 32K model gpt-4-32k, we can process massive prompts (32,000 tokens is roughly 50 pages of text).

We can use the GPT-4 32K model simply by passing its name explicitly to the model parameter. Its 32,768-token context window is shared between the prompt and the response: if you leave max_tokens out, the response can use whatever room the prompt leaves, and if you set it explicitly, the prompt tokens plus max_tokens must not exceed 32,768 or the API will reject the request. Passing max_tokens=32768 would therefore leave no room for the prompt, so the example below caps the response at a more modest value.

```python
messages = [{"role": "user", "content": "As an intelligent AI model, if you could be any fictional character, who would you choose and why?"}]

response = openai.ChatCompletion.create(
    model="gpt-4-32k",
    max_tokens=1000,    # prompt tokens + max_tokens must stay within 32,768
    temperature=1.2,
    messages=messages,
)

print(response)
```

An important heads-up: limit the max_tokens value whenever you don’t need the upper limit, because a mistake in the prompt submission can lead to costly API calls. GPT-4 is both more expensive per token and accepts larger inputs, so the impact adds up, especially with repeated calls.

For example, if your cost is approximately $0.10 per 1,000 tokens (see detailed pricing below) and a 30K-token submission results in a 10K-token response from the model, the call will cost 40K × $0.10 / 1,000 = $4.
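The same arithmetic can be scripted to estimate a call’s cost before sending it. A minimal sketch using the per-1K-token prices listed in this article (prices change, so treat them as placeholders); estimate_cost and PRICES_PER_1K are hypothetical names. Prompt and completion tokens are priced separately, and on gpt-4-32k the two together must fit in 32,768 tokens, so the example uses a 20K-token prompt with a 10K-token response:

```python
# Per-1K-token prices in USD, as listed in this article (placeholders; check current pricing).
PRICES_PER_1K = {
    "gpt-4": {"prompt": 0.03, "completion": 0.06},
    "gpt-4-32k": {"prompt": 0.06, "completion": 0.12},
}


def estimate_cost(model: str, prompt_tokens: int, completion_tokens: int) -> float:
    """Estimate the USD cost of one call; prompt and completion tokens are billed separately."""
    price = PRICES_PER_1K[model]
    return (prompt_tokens / 1000 * price["prompt"]
            + completion_tokens / 1000 * price["completion"])


# Hypothetical gpt-4-32k call: 20K prompt tokens, 10K completion tokens.
print(f"${estimate_cost('gpt-4-32k', 20_000, 10_000):.2f}")  # prints $2.40

# Passing max_tokens as completion_tokens gives an upper bound before the call is made.
upper_bound = estimate_cost("gpt-4", 1_000, 100)
```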

For this reason, I believe ChatGPT’s GPT-3.5-Turbo model will remain highly relevant and attractive for app developers, while GPT-4-32K will give superpowers to enterprise clients with the budget and appetite for experimentation. Independent ChatGPT developers can still use the GPT-4 model and its gpt-4-32k variant in cautious experiments, which is why the max_tokens parameter can play a critical role in the development process.

Please refer to our ChatGPT API Developer Guide for an exhaustive explanation of the optional and mandatory ChatCompletion parameters.

2- GPT-4 vs GPT-3.5-Turbo

Let’s first look at the most significant differences between GPT-4 and GPT-3.5-Turbo that might impact ChatGPT development, from the perspective of both model usage and API usage.

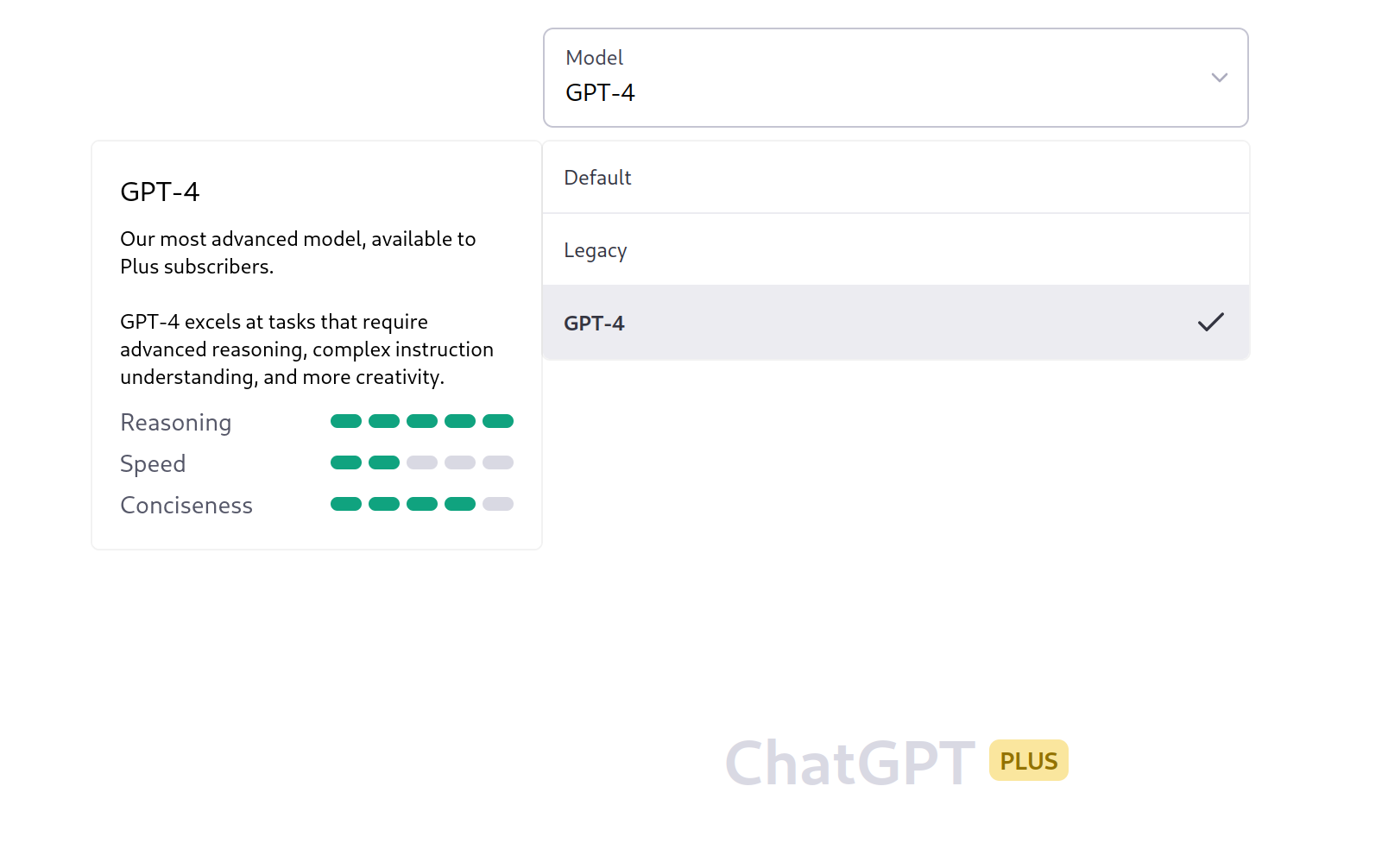

The new GPT-4 is a member of the ChatGPT AI model family, and in the near future it will likely become the default model for the ChatGPT web service. Currently, it is offered only to ChatGPT Plus users, with a usage cap to manage the extreme traffic demand OpenAI is facing these days. A ChatGPT Plus subscription costs $20 per month and was first available in the United States and the United Kingdom (it is now available in almost all other countries).

Let’s look at the differences between gpt-3.5-turbo and gpt-4.

The Most Fundamental Difference: image-to-text

GPT-4 is not only a language model, it’s also a visual model.

-Greg Brockman

GPT-4 can accept images as input and hold a conversation about them at an almost human level. You can provide an image to GPT-4 and ask questions such as “What’s funny here?”, “What’s the difference?”, “Can you describe this image?”, “Can you summarize the image?” and many more.

In addition to the new image-to-text feature, GPT-4 also has text-to-text capabilities, unlike GPT-3.5-Turbo from the ChatGPT family, which is text-to-text only. Check out the highly exciting potential use cases of GPT-4 below.

| Model | ChatGPT Modality |
|---|---|
| GPT-4 | image-to-text & text-to-text |
| GPT-3.5-Turbo | text-to-text only |

Pricing: GPT-4 costs more than GPT-3.5-Turbo

GPT-4 (default 8K variant) costs $0.03 per 1K tokens (prompt) and $0.06 per 1K tokens (completion).

The GPT-4 32K variant costs $0.06 per 1K tokens (prompt) and $0.12 per 1K tokens (completion).

| Model | API Pricing (per 1K tokens) |
|---|---|
| GPT-4 (8K model) | $0.03 prompt + $0.06 completion |
| GPT-4 (32K model) | $0.06 prompt + $0.12 completion |
| GPT-3.5-Turbo | $0.002 |

As gpt-3.5-turbo costs $0.002 per 1K tokens, the new model is significantly more expensive, but it is also significantly more capable at complex tasks. Based on OpenAI’s business model so far, we expect a price cut for GPT-4’s API within the next few months.

Models & API: Same API endpoint different model name

Both GPT-4 and GPT-3.5-Turbo use the ChatCompletion endpoint. When assigning a value to the model parameter, ChatGPT developers can choose from the following names:

for GPT-4 API Models

- model = gpt-4

- model = gpt-4-32k

OpenAI also provides snapshots of the 8K and 32K versions from March 14, 2023. These snapshot models will only be supported for 3 months. If you have a specific reason to use the March 14 snapshots, you can refer to them as follows:

- model = gpt-4-0314

- model = gpt-4-32k-0314

for GPT-3.5-Turbo API Models

- model = gpt-3.5-turbo (default choice)

- model = gpt-3.5-turbo-0301 (to specify the exact model; currently there’s only one snapshot, so it is equivalent to the default choice)

| Model Family | ChatGPT API Model Names |
|---|---|
| GPT-4 | gpt-4 (default 8K version), gpt-4-32k, gpt-4-0314, gpt-4-32k-0314 |
| GPT-3.5-Turbo | gpt-3.5-turbo (default name), gpt-3.5-turbo-0301 (current version) |

Behavior: GPT-4 behaves more sanely and is more self-aware than its older sibling

GPT-4 brings further improvements in terms of society’s safety guidelines. These highly capable AI models can be extremely beneficial as well as harmful. Thankfully, OpenAI is aware of that reality and is putting a lot of effort into improving safety precautions based on the models’ behavior.

GPT-4 handles disallowed and sensitive prompts significantly better: on sensitive prompts, the rate of incorrect behavior drops from 42% for gpt-3.5-turbo to 24% for gpt-4 (almost a 2X improvement), and an even larger improvement was observed for disallowed prompts. You can take a look at OpenAI’s original GPT-4 Technical Report for details.

| Model | ChatGPT API Prompt Maximum Token Size |
|---|---|
| GPT-4 | 8K & 32K maximum token input |
| GPT-3.5-Turbo | 4K maximum token input |

Prompt Size: GPT-4 can handle significantly larger prompts / queries.

gpt-3.5-turbo can accept up to 4,096 tokens as input. gpt-4 significantly increases the maximum number of tokens that can be passed to the model, with two limits depending on the model variant:

- gpt-4: 8,192 tokens

- gpt-4-32k: 32,768 tokens
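Because the prompt and the response share each model’s context window, a common safeguard is to cap max_tokens at whatever room the prompt leaves. A sketch under the assumption that prompt_tokens has already been counted (chat formatting adds a few tokens per message, so leave some margin in practice); safe_max_tokens is a hypothetical helper, and the window sizes are the ones listed in this article.

```python
# Context window sizes (prompt + completion), as listed in this article.
CONTEXT_WINDOW = {
    "gpt-3.5-turbo": 4_096,
    "gpt-4": 8_192,
    "gpt-4-32k": 32_768,
}


def safe_max_tokens(model: str, prompt_tokens: int, desired: int) -> int:
    """Cap max_tokens so that prompt tokens + max_tokens fit in the model's window."""
    room = CONTEXT_WINDOW[model] - prompt_tokens
    if room <= 0:
        raise ValueError(f"A {prompt_tokens}-token prompt does not fit in {model}'s window")
    return min(desired, room)


print(safe_max_tokens("gpt-4", 8_000, 500))      # prints 192
print(safe_max_tokens("gpt-4-32k", 8_000, 500))  # prints 500
```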

This is a major improvement in GPT-4, although in a chat application 4K tokens (approximately 3K words) will usually be more than enough to interact with ChatGPT models. You can refer to this section, where we’ve explained token calculation vs. word counts for ChatGPT.
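For quick planning, OpenAI’s rule of thumb is that one token is roughly 0.75 English words (about 4 characters). The sketch below applies that ratio in both directions; it is an approximation only, and for exact counts the tiktoken library (for example tiktoken.encoding_for_model("gpt-4")) tokenizes text the way the models do. estimate_tokens and estimate_words are hypothetical helper names.

```python
import math

WORDS_PER_TOKEN = 0.75  # rough rule of thumb for English text, not an exact ratio


def estimate_tokens(text: str) -> int:
    """Roughly estimate the token count of English text from its word count."""
    words = len(text.split())
    return math.ceil(words / WORDS_PER_TOKEN)


def estimate_words(tokens: int) -> int:
    """Roughly estimate how many English words fit in a given number of tokens."""
    return int(tokens * WORDS_PER_TOKEN)


print(estimate_words(4_096))   # prints 3072, the "approximately 3K words" for gpt-3.5-turbo
print(estimate_words(32_768))  # prints 24576, close to the 25,000-word figure for 32K tokens
```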

32K tokens correspond to almost 50 pages, or approximately 25,000 words. This means we will be able to provide books (in a few parts), lengthy documents, legal agreements, full chapters, courses, technical documentation, developer guides and much more to GPT-4. This is a level up in task automation, and it will enable automation use cases at a much higher scale. In coding applications, it makes the difference between prompting with a little script and prompting with a whole codebase.

In summary, GPT-4 not only improves the accuracy and intelligence of the already impressive gpt-3.5-turbo, but also allows up to 8X larger prompts (32,768 vs 4,096 tokens). It also offers a fundamentally new feature: it is multimodal, so it can work across modes such as image-to-text and text-to-text.


Core Improvements:

Besides improved safety, GPT-4 is also significantly better at mathematical operations, counting, conditional statements, language understanding and reasoning. In areas such as mathematical equations, basic algebra, counting and condition-based tasks, ChatGPT’s gpt-3.5-turbo model struggled or would give up completely. GPT-4 performs impressively in those areas.

This is an augmenting tool but it’s still important that you are in the driver seat! -Greg Brockman

3- Special Use Cases for GPT-4

GPT-4 will make possible hundreds of new types of tasks, solutions, business models and projects that have never existed before.

Accessibility Features: One of the first use cases that comes to mind is accessibility for blind people. For example, they will now be able to submit photos and get detailed descriptions of them.

General Image Analysis: Furthermore, anyone can have in-depth conversations with GPT-4 about the objects, stories, people, animals, weather, landscapes and vehicles in images. We could potentially analyze deep-space images, collaborate on technical drawings in engineering projects, and support cancer research using medical imaging output.

This has never been possible at this level of excellence, and there will be an explosion of use cases in the near future.

Technical Drawings: Technical drawings of systems, machines, circuits, quantum circuits, devices, city plans, infrastructure and much more can now be understood by humans through AI-assisted simplification and interaction. GPT-4 is a model that amplifies human capabilities and enables many tasks that were never possible before. There are many powerful applications we can develop, collectively and individually, for the benefit of societies and humankind as well as animal welfare.

Analyzing charts, plots and graphs: This one is also wild. Humanity has created a massive number of charts, but nobody has time to go through all of them, and charts can be hard to read and complicated. As someone with a finance and banking background, I’ve reviewed and analyzed tens of thousands of charts; in fact, just thinking about it hurts my oculomotor nerves.

As an advanced visual AI model that is also a large language model, GPT-4 can analyze large datasets of charts and their corresponding information across domains such as healthcare, finance, education, topography, geography, seismology, public service graphs, crime statistics and many more. You can reason and debate with GPT-4 about highly complex data science charts or potentially financial market charts. This also has the potential to change trading, a very popular finance topic, altogether.

Mock-ups: GPT-4 can be fed scribbles of startup ideas, machine designs, flowcharts and the like to save, convert or debate them. It might even be able to turn them into fully functioning MVPs or production-level products in the near future.

Text notes and drawing notes: GPT-4 can take a rough handwritten note, fully understand it and work on it. It can also interpret and reason about very primitive hand drawings.

Long documents: Tax, legal, medical, technical and financial reports can be understood, analyzed, simplified, summarized, calculated, transformed and presented by GPT-4. GPT-4 will change many white-collar finance and legal jobs and the whole Business Intelligence industry.

Environmental crisis: Additionally, I’m sure OpenAI models will play a significant role in finding solutions to the environmental crisis, in prevention, and in dealing with the complications of climate change.

What do you think? Do you have a unique use case idea? Please submit your comments below to share with the computer science and developer community and contribute to the advancement of technology as well as empowerment of people. You are highly appreciated as a visitor and so is your input.

ChatGPT developers will be able to conveniently use the OpenAI API and deploy game-changing web applications and SaaS solutions on next-generation serverless cloud computation solutions such as Azure’s App Service, GCP’s App Engine, AWS’ Lambda, Heroku, Oracle Cloud Functions.

Let's proactively make it amazing for everyone.

Summary

In this Python tutorial, we got familiar with GPT-4, the latest model of OpenAI’s ChatGPT family. It’s very exciting to hear about the improvements in capability and reliability that this new model brings, especially its ability to handle image input and provide safer output for society.

If you don’t have the openai library for Python, we’ve also discussed how to install it using Python’s pip package manager. For further instructions on using pip, you can refer to our Installing pip packages with Python tutorial.

As developers building on the ChatGPT API and other Python apps, it’s important for us to keep up with the latest advancements in language models and integrate them into our platforms to provide the best possible experience for our users and communities.

We look forward to sharing the highest-quality information about GPT-4 with our visitors and incorporating it into our technology stack to further enhance the quality of our scripts, products and services.

Footnote on gpt-3.5-turbo:

ChatGPT’s gpt-3.5-turbo is still a great alternative as it offers an unbeatable price point for all developers to conveniently build on. gpt-3.5 is also likely to soon receive updates and improvements with a new model version after 0301 which will be exciting to see as well. Lots happening in the AI and programming world right now.