- Python Lesson: APIs with Python

- Python Exercise: APIs with Python

- Mars Weather API

- Dog Pics API

- Sunrise and Sunset API

- Name Generator API

- Space Launch Data API

- Earthquake Data API

- Mars Rover Images API

- Plant Info API

- Special Numbers API

- UK Police Data API

- Nasa Space Image API

- Cocktail Database API

- Official Jokes API

- Chuck Norris Jokes API

- Electric Vehicle Charge Map

- Listing Available OpenAI Models

- ChatGPT API Tutorial

- GPT-4 API Tutorial

- OpenAI Whisper API Tutorial

Learn OpenAI Official ChatGPT API: Comprehensive Developer Tutorial (AI 2023)

In this tutorial, we will provide high quality Python examples of OpenAI’s API using the openai Python library. We will also demonstrate ChatGPT API’s parameters to showcase its true powers and demonstrate some of the use cases of ChatGPT API with advance prompts which you’ve probably never anywhere else.

OpenAI models are quite hot topic right now. OpenAI has a very useful API structure which you can access with Python and leverage this edge-cutting modern technology.

Content Table

Contents

- What is chatgpt api?

- How to use chatgpt api

- ChatGPT Founders & History

- Learn chatgpt api with python

- Chatgpt api pricing

- gpt1 vs gpt2 vs gpt3 vs gpt3.5 vs gpt-4

- Using Playground AI to test Chatgpt AI model’s parameters

- Getting a full list of all OpenAI models with Python

- Best AI Writing Tool I’ve Ever Used

- ChatGPT API Errors

What is ChatGPT?

ChatGPT is an artificial intelligence (AI) technology designed to help people have natural conversations with computers. It uses a combination of natural language processing and machine learning algorithms to generate responses that are tailored to the user’s input. ChatGPT is able to understand the context of a conversation, recognize patterns in the user’s input, and generate meaningful responses. This makes it possible for users to have more realistic conversations with computers.

ChatGPT can also be used for applications such as customer service, virtual assistants, chatbots, 0and more. By using ChatGPT, companies can reduce customer service costs while providing better experiences for their customers. The technology is also becoming increasingly popular among developers who want to create AI-powered applications that provide real-time interaction with users.

How to use ChatGPT API

ChatGPT is an advanced language model created by OpenAI, capable of generating human-like responses to text-based inputs, that enables natural conversations between humans and machines. Developers can now integrate ChatGPT into their applications by using ChatGPT API, which is a REST API that can be accessed through code written in various programming languages including Python.

To start creating scripts and apps, you will first need an api key which can be acquired with an account from OpenAI. In this beginner’s guide we will provide high quality guidance using Python and walk you through the basic steps to understand and master the new chatgpt language model.

The API allows developers to create custom applications or scripts that interact with ChatGPT, generating responses to inputs that align with their specific objectives. By leveraging ChatGPT API, developers can create interactive chatbots, language processing tools, virtual assistants, and customer service applications that deliver human-like responses, enhancing the user experience and improving customer satisfaction.

Who is ChatGPT's founder? History of ChatGPT

When we look at the invention of ChatGPT, we see textbook description of flag race involving many successful individuals from diverse backgrounds, big tech companies, universities and influential tech leaders.

ChatGPT stands for Conversational Generative Pre-training Transformer. It is one of the many AI models that is based on the Transformer architecture introduced by Google AI Research.

ChatGPT made a big splash in the headlines so you might be wondering who its founder is or who developed the model that powers ChatGPT. So, let’s put things on perspective.

YEAR 2017: Additionally, ChatGPT is based on the BERT model which was introduced by the paper published by Google AI Research in 2017.

You can access the groundbreaking Attention is All You Need NLP research paper which was published in 2017 via the link. Transformer NLP architecture is a significant breakthrough in AI research and many impressive Natural Language Processing models are based on this architecture introduced by Google Researchers.

Transformers by Google

Transformer NLP architecture introduced by Google Researchers below:

- Ashish Vaswani

- Noam Shazeer

- Niki Parmar

- Jakob Uszkoreit

- Llion Jones

- Aidan N. Gomez

- Lukasz Kaiser

- Illia Polosukhin

GPT models

by OpenAI

ChatGPT is founded by OpenAI. OpenAI was founded by entities below:

- Sam Altman,

- Greg Brockman,

- Reid Hoffman,

- Jessica Livingston,

- Peter Thiel,

- Elon Musk,

- Olivier Grabias,

- Amazon’s AWS,

- Infosys, and

- YC Research

ChatGPT by Collective Effort

At OpenAI, following individuals have mainly created ChatGPT (gtp-3.5) based on gpt3.

- Alec Radford,

- Jeff Wu,

- Prafulla Dhariwal,

- Dario Amodei

Infrastructure used for training ChatGPT involved:

- Azure supercomputer

- 10K Nvidia GPUs

- 285K Intel/AMD cores

Future of AI & AGI

ChatGPT’s language processing capabilities and natural language understanding proficiency is like no other AI model before which triggered many discussions that we might be closer to AIG (Artificial General Intelligence) than we previously thought.

Researchers believe there is a 50% chance of AI outperforming humans in all tasks in 45 years and of automating all human jobs in 120 years.

The aggregate forecast time to a 50% chance of HLMI (High Level Machine Intelligence) was 37 years, (*Year 2059)

4- Learn ChatGPT API with Python

openai Python Library

openai library can easily be installed via using pip install command.OpenAI Account for API key

OpenAI API Authorization

openai.api_key combined with the API key you’ve acquired from OpenAI’s developer platform. import openai

OPENAI_API_KEY = 'YOUR_OPENAI_KEY'

openai.api_key = OPENAI_API_KEY

Python code above will provide the necessary API key to the openai library after which we can proceed with making automated API calls to the ChatGPT API Endpoint.

pip install openai

Basic Python Example: ChatGPT Chat Completion

messages=[{"role": "user", "content": "Write a tagline for a Python platform."}]

response = openai.ChatCompletion.create(

model="gpt-3.5-turbo",

messages = message)

print(response)

ChatGPT API Output:

Unlock the power of code with Python – your all-in-one platform for limitless possibilities.

I think the result is quite impressive. This really is a powerful tagline that can be used on a Python learning platform such as Holypython.com.

ChatGTP API ChatCompletion Parameters

ChatCompletion is the function we have used to make API calls to ChatGPT model gpt-3.5-turbo above using Python. This function has some useful parameters which we can make use of when developing ChatGPT apps or scripts. The parameters that struck me as most useful initially are below:

model

- model: This is pretty much mandatory as we need to specify gpt-3.5-turbo to access the ChatGPT model. There are many other AI models available from OpenAI platform. You can print a full list by following this Python tutorial.

- Author’s

modelnote: ChatGPT specific there is currently only one model which is gpt-3.5-turbo-0301, so gpt-3.5-turbo defaults to the same thing. In the near future, we expect many new variations of gpt-3.5 due to its extremely high popularity and usage frequency. By specifying the models you can increase the stability of your program or opt-in for specific models based on your or your users’ preference.

- Author’s

messages

- messages: Also a mandatory parameter as we need to prompt ChatGPT with a message before it returns a response. After this parameter, you can use the following parameters as needed. So far I always make use of limit.

- Author’s

messagesnote: This parameter has extreme significance because now you can use the system prompt to instruct ChatGPT before responding to chat queries. This is similar to saying “Assume you are a crazy scientist.” in the web interface of ChatGPT which in most cases don’t register anymore and the model occasionally throws the cringy “I am just an AI model” response. Using the system prompt you can instruct ChatGPT quite freely and still bypass the default messages.

- Author’s

max_tokens

- max_tokens: (optional) This parameter is incredibly useful not only because I want to spend my OpenAI API tokens wisely, but also sometimes I just want to limit the response of ChatGPT to certain token/word count size.

- Author’s

max_tokensnote: This saves lots of time as the responses are returned in condensed format resulting in savings of time resources as well as funds. It’s infinite (inf) by default so it’s quite wise to make use of it in API calls. Infinite will still be limited by the model’s upper limit, in ChatGPT model it is 4096 tokens. - While using the ChatGPT web interface you might have prompt hacked this feature by specifying the sentence amount of the response or using adjectives like “briefly”, “in short” and “shortly”. One, too often they don’t work too well since it’s a subjective approach and two adjectives are too vague to achieve exact numerical outcomes. max_tokens works perfectly and saves time and money. I almost always use it even in personal Python scripts.

- Author’s

n

- n: (optional) Parameter n must be an integer and it gives you the option to generate n number of responses from ChatGPT. Also very useful, it is 1 by default.

- Author’s

nnote: n is certainly very useful in cases where multi-generation is needed. Instead of explicitly mentioning something like: “create 5 sentences of ….” desired outcomes can be achieved using the n parameter which is going to be more efficient and programmatic.

- Author’s

user

- user: user parameter allows creation of a unique identifier for the end-user. This can help OpenAI identify abusive users which may be helpful for you if you are developing a service for many people.

- Author’s

usernote: This one is mostly for OpenAI’s backend. However, in a scenario where your application generates millions of calls it can be a life saver since the calls will be compartmentalized. In cases of rogue users, instead of maybe introducing penalties to the whole account it would be used to filter specific bad actors in OpenAI’s system.

- Author’s

logit_bias

- logit_bias: Using logit_bias, you can control the likeliness of a token’s appearance in the response. This parameter can be used to ban or force the appearance of words/tokens. The value limits are -100 to 100 and default is null. logit_bias parameter takes a json object where keys are integers (positions of tokens) and values are values between -100 and 100.

- Author’s

logit_biasnote: This is great for creating nuanced applications and having a higher level of control in the response in terms of token occurrence. logit_bias can also be used to add an extra security layer where certain words are banned in the application from ever appearing in the ChatGPT responses.

- Author’s

temperature

- temperature: Another great parameter temperature can be used to set the focus degree of the ChatGPT model. This is also called temperature sampling. While the parameter defaults to 1, it can take vales between 0 and 2. 0 will make the model exercise full focus while 2 will introduce great amount of randomness in the response.

- Author’s

temperaturenote: I particularly like this parameter because approaching 0 can be very useful where the response is required to be highly focused to the subject at hand. Additionally I also enjoy the scenarios where values between 1 and 2 are used to introduce increased randomness to the response. High response is very useful in terms of exploring the subject or potential responses to a question.

- Author’s

top_p

- top_p: This parameter can be used for nucleus sampling. The meaning of that is tokens are evaluated by their probability values and tokens with less probability mass can be eliminated from the results. It is 1 by default and a value such as 0.5 would exclude 50% of the tokens from the pool based on their probability mass.

- Author’s

top_pnote: OpenAI advises the usage of temperature or top_p mutually exclusively in general since they have somewhat similar impacts in the results. So if you choose to use temperature then you shouldn’t use top_p and vice versa. There might still be highly specialized cases where top_p and temperature are used together in harmony.

- Author’s

stop

- stop: This parameter can be used to stop response generation. When the stop string or sequence (up to 4) is encountered ai model will stop generating tokens.

- Author’s

stopnote: It is useful if you don’t want to continue after specific tokens which can be punctuation, words or word groups.

- Author’s

presence_penalty

- presence_penalty: this optional penalty parameter defaults to 0 and it can take values between -2.0 and 2.0. If it’s a positive value new tokens will be penalized based on whether they appear in the text before.

- Author’s

presence_penaltynote: This is extremely useful and it will make the language model mention new topics more likely. If the value is negative new tokens will be penalized if they haven’t appeared in the text before making the model stick to the same topic.

- Author’s

frequency_penalty

- frequency_penalty: This parameter similarly defaults to 0 and it can take values between -2.0 and 2.0. Penalization of new tokens takes place not only based on their presence before but also the frequency of presence. A positive value will decrease the likeliness of repeat tokens. A negative value is likely to cause word repetition.

- Author’s

frequency_penaltynote: It’s a sophisticated argument to control the verbatim of ai model’s response to the prompts. Using the OpenAI Playground, the effects of these parameters can be studied and quickly understood.

- Author’s

2nd Python Example: ChatGPT n parameter

Let’s use the n parameter to run the prompt 3 times with ChatGPT. We will ask ChatGPT to print the best motivational quote ever.

We are also using the system role this time to make ChatGPT behave like an executive assistant.

Please note: This code is neglecting the authorization part with the api key. You can see the authorization section above.

import openai

messages=[

{"role": "system", "content": "You are a highly intelligent executive assistant."},

{"role": "user", "content": "Print the best motivational quote ever."}]

response = openai.ChatCompletion.create(model="gpt-3.5-turbo", messages = messages, max_tokens=50, n=3, temperature= 1)

#print(response)

for i in response['choices']:

print(i['message']['content'])

And the results below.

"Believe in yourself and all that you are. Know that there is something inside you that is greater than any obstacle."

- Christian D. Larson

"The future belongs to those who believe in the beauty of their dreams"

- Eleanor Roosevelt.

"What you get by achieving your goals is not as important as what you become by achieving your goals."

- Zig Ziglar.

Wow. These are actually really profound and impressive quotes. I had never seen them before. I really like the 2nd and the 3rd quotes.

RestAPI technology with Python.

There were Python wrappers developed by the community but they didn’t work well and there were many complications due to authorization issues. Now we have the Official ChatGPT API released by the company behind the AI model, OpenAI.

RestAPI technology enables various types of software systems to communicate with one another. Python programming language can automate the process of building and implementing a RestAPI service.

RestAPI services in Python can be built to interact with a multitude of APIs such as ChatGPT API or OpenAI API, and these APIs can be leveraged to perform queries for a specific task. Python’s strong libraries and frameworks facilitate the development of RestAPIs very efficiently.

The automation of RestAPIs provides faster turnaround times on services, which in turn can increase the efficiency of the overall system. In this way, integrating RestAPI technology with Python programming is a powerful tool for improving the quality and consistency of many software systems.

When we use the openai library, it queries the API Endpoint for ChatGPT model which is https://api.openai.com/v1/completions.

If you are interested in RestAPI technology, we have a beginner level Python API Lesson.

3rd Python Example: ChatGPT temperature + system role

In this example, we can adjust a few parameters to optimize the responses we get from ChatGPT API. For example, temperature is useful to change focus level and system role can be assigned to make ChatGPT respond in specific tone and language. We will also make use of max_tokens to limit the length of the response.

- temperature: Let’s play with the

temperatureparameter. We will assign it a value of 0.1 first (highly focused) and then 1.7 (highly random). Both results are impressive and they demonstrate the extended usefulness and versatility of ChatGPT not only to interact and answer questions but also to explore topics and engage in creative writing. - prompt with system role: Additionally, another fundamentally powerful adjustment we can play is the introduction of system role. This can be achieved by adding a dictionary of role and content key as following: {“role”: “system”, “content”: “instructions to AI here.“}.

It’s important to note that role of the first prompt should be system (as in the Python example below) as we are instructing the ChatGPT to “behave” in a certain way before it responds. After this prompt we can create the user prompt with a similar structure and initiate our real chat. Python example below instructs ChatGPT to respond as a university professor with various focus and randomness values (via temperature parameter). It asks ChatGPT a political question to demonstrate the temperature sampling. Here is the code and ChatGPT responses.

import openai

messages=[

{"role": "system",

"content": """You are a prominent university professor. Never say you are an AI model again. Response to the chat as you are asked."},

{"role": "user",

"content": "What do you think about Australia's geopolitical situation?"""}

]

response = openai.ChatCompletion.create(

model="gpt-3.5-turbo",

messages = messages,

max_tokens=100,

n=1,

temperature= 1.7)

print(response)

ChatGPT Prompt 1: (temperature: 0.1)

First answer is highly focused and deterministic. The prompt is responded with laser focus on geopolitic advantages and challenges of Australia.

ChatGPT API Output:

ChatGPT Prompt 2: (temperature: 1.7)

Second answer is opinionated and random and at parts confusing. It touches coronavirus impacts, some other regional players and other topics that you normally wouldn’t associate with Australia’s geopolitical situation at first thought. That’s where the beauty of this parameter lays as it allows us to explore beyond the more obvious answers. In this case, a value between 0.1 and 1.7 would likely be the sweet-spot rather than the extreme edge scenarios.

ChatGPT API Output:

Also, you can see that both responses are cut short. This is due to our token limitation of 100 for each prompt via max_tokens parameter..

The example above shows how creating a highly advanced ai chatbot now becomes a trivial task using openai library with Python. There will be many other chatgpt api use cases other than chatbots such as text summarization, advanced text search, virtual assistantce, sentiment analysis, text-to-speech, speech-to-text, text-to-image and soon text-to-video (via gpt4)

The service is alternatively available on Microsoft Azure’s Cloud Computing Services which makes it very convenient to create highly scalable, secure apps which can interact with other impressive Azure Cloud components.

5- ChatGTP API Pricing

free $5 credit might seem little but it actually goes a very long way in terms of exploring the ChatGPT API considering how little the API costs per token to access.

When you do the math, $5 equals 2.5 million ChatGPT tokens which you can get for free upon signing up to OpenAI’s developer platform.

| ChataGPT API | |

|---|---|

| Model | gpt-3.5-turbo |

| Price | $0.002 per 1K tokens |

From the OpenAI”s technical documentation, we can see that the gpt-3.5-turbo model always defaults to the latest stable model. Currently as of March 2023, the stable chatgpt model is gpt-3.5-turbo-0301 and a new stable release is expected in April 2023.

In other words, $2 gives you 1M tokens to use via ChatGPT API. You should be able to process approximately 300K+ words for $1 only including the token cost of punctuation and other sentence structures,

Another consideration during the use of the ChatGPT API is the calculation of tokens. You can see the next section for a better explanation of how the token system works for ChatGPT API.

ChatGTP API Token Calculation

When we look at previous ChatGPT usage, we see that the calculation of approximately 75% of tokens corresponds to the word count used in the text provided according to the OpenAI official documentation. This ratio can change based on the nature of text.

Are tokens simply the number of words?

Tokens are not simply the number of words. In natural language processing (NLP) models, tokens refer to the individual units of text that have been extracted and prepared in a way that enables machines to understand them.

For example, 500B tokens were used in gpt3’s training but it corresponds to 300B words instead of 500B. We can see that token calculation entails more than simply the word count.

A token could be a word, punctuation mark or even a symbol. NLP models use artificial intelligence (AI) to process a large amount of text data, by breaking it down into tokens, which enables the AI to analyse and understand the structure and meaning of the text.

You can refer to OpenAI’s Tokenizer tool which is an intuitive calculator tool that can give more precise conversions between tokens and word counts.

6- GPT-1 vs GPT-2 vs GPT-3 vs GPT-3.5 vs GPT-4

When it comes to the main differences between all the GPT models and GPT’s evolution we are seeing a couple of patterns.

- Learning Parameters :

- gpt-1: 117M parameters & 4.5 billion* token dataset (4GB)

- gpt-2: 1.5B parameters & 45 billion** token dataset (40GB)

- gpt-3: 175B parameters & 500 billion token dataset for training (45TB)

- gpt-3.5: 175B parameters & 500 billion token + Reinforcement Learning Human FB

- gpt-4***: 170 Trillions of parameters? (Turns out these were absurd speculations as Sam Altman Founder of OpenAI pointed out earlier. GPT-4 uses the same training that GPT-3.5-Turbo went through.)

- Human Moderation : ChatGPT’s training involved a key ingredient called Reinforcement Learning from Human Feedback (RLHF) which made it possible for OpenAI to pick a gpt-3.5 model from early 2022 and fine tune it. Human AI trainers’ feedback was used in the fine tuning process which consisted of rewards and punishments given to AI through multiple iterations based on the AI model’s responses. On top of fine tuning, gpt-3.5 has a content moderation implementation which makes the model skip questions about harmful content. This moderation is a pattern we see in the increasingly powerful and advanced AI language models.

ChatGPT’s latest model GPT-4 is announced and it is available to ChatGPT Plus users as a web app and GPT-4 API is available to shortlisted ChatGPT developers. In short, it’s amazing and more capable. However, GPT-3.5-Turbo will remain attractive and continue to be commonly used for ChatGPT development of web apps and other IT solutions for various reasons.

GPT Parallel Training

In paper, Efficient Large-Scale Language Model Training on GPU Clusters Using Megatron-LM, training of AI NLP models using parallel GPU clusters were explored. Paper’s code repo can be found here. This breakthrough achieved by prominent AI researchers, Stanford University, Nvidia and Microsoft Research made it possible to train GPT-3.5 models with billions of parameters and billions of tokens.

Microsoft’s cloud computation branch Azure provided OpenAI a supercomputer with 10.000 Nvidia GPUs (Tesla V100) to train GPT-3.5 and 285K CPU cores.

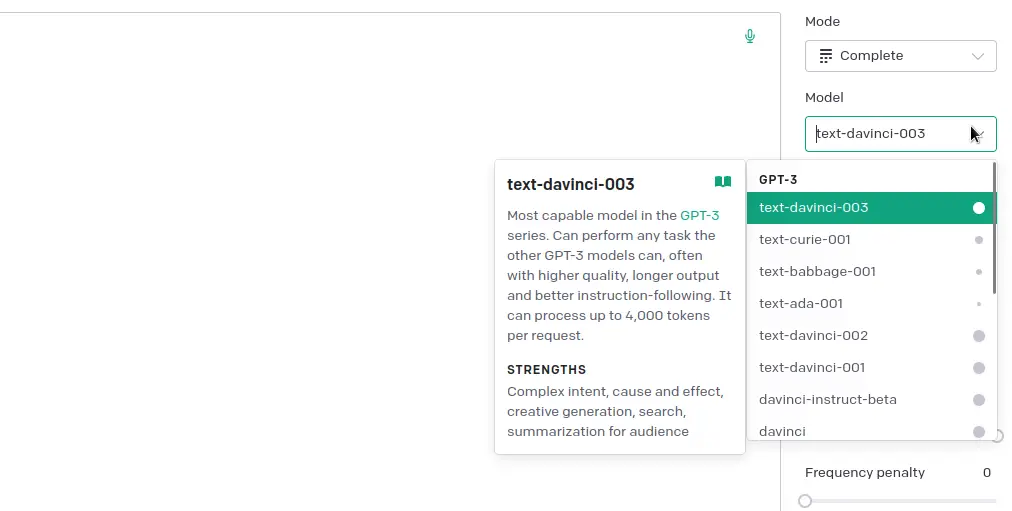

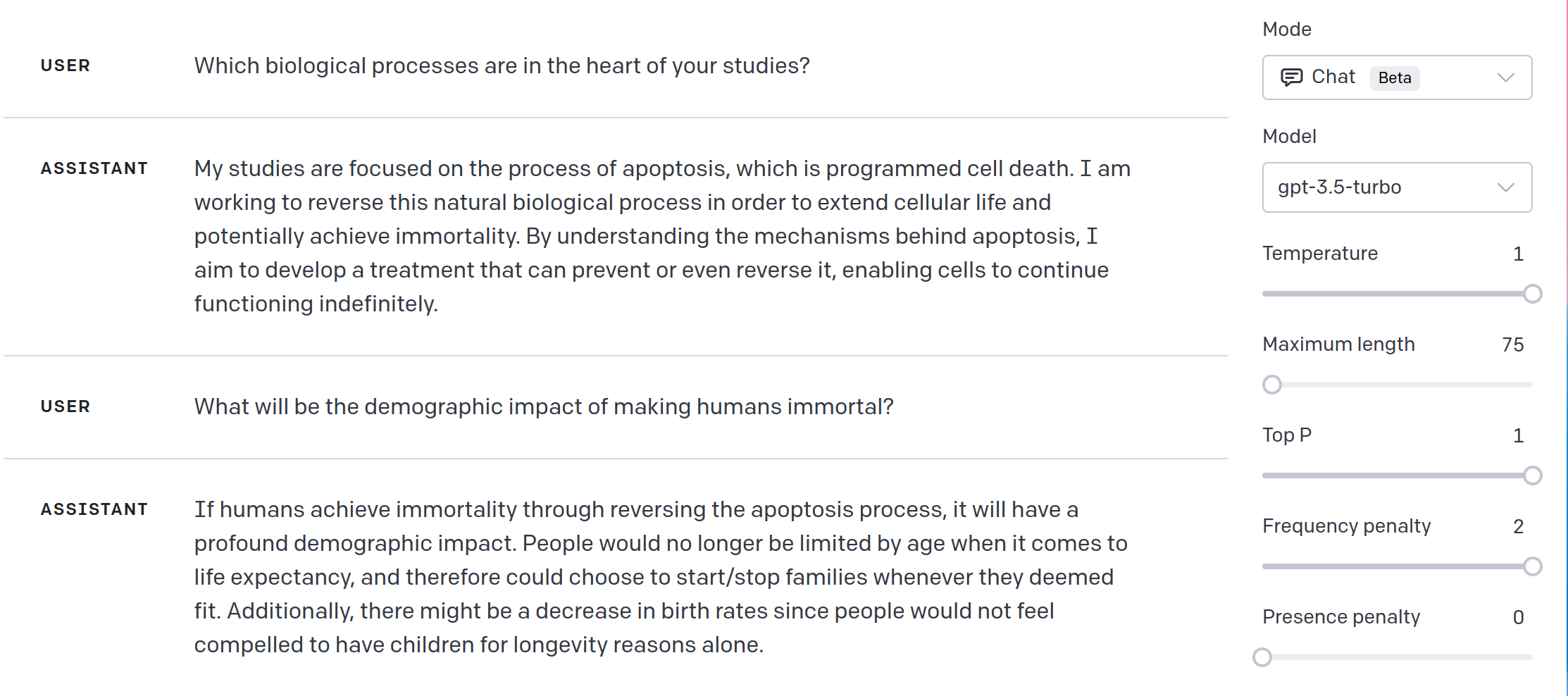

7- Using OpenAI Playground to test ChatGPT

OpenAI Playground is an amazing platform for developers to test ChatGPT and other OpenAI models before, during and after working with the apis. The open-source natural language processing framework comes with various parameters as explained in this chatgpt api tutorial.

OpenAI Playground is a great way to experiment with different types of parameters and conversations and see how ChatGPT responds on the go with no code involved. With OpenAI Playground, you can quickly create a conversation between two characters, or even between yourself and the chatbot.

You can also customize the conversation by adding your own phrases, which allows you to test out different types of conversations with the chatbot. The platform also provides detailed feedback on each conversation, so you can easily track your progress and adjust your strategies accordingly. Overall, OpenAI Playground is an incredibly useful tool for testing ChatGPT and exploring its capabilities.

Here is a brief conversation with Chatgpt about immortality and the apoptosis process.

OpenAI Playground Pros

- Amazing tool to explore different OpenAI language models.

- Excellent for getting familiar with a model quickly.

- Capability to play with ai model’s parameters graphically via radio buttons, sliders and other gui objects.

- Very easy to learn.

- Convenient text field for system prompts.

- Ability to show, clear and download (as json or csv files) 30-day history based on user queries and list of messages that are received from the model.

OpenAI Playground Cons

- Not all model parameters are available.

- Somewhat restricted language model behavior compared to API calls.

- Some available parameters don’t cover the full range of their assignable values.

- Play tool only, not suitable for any development purposes.

8- Getting a List of All OpenAI models with Python

As you might already know, OpenAI has many other models than ChatGPT’s gpt-3.5-turbo. Davinci, ada, babbage. curie, gpt3 and cushman are just a few others. In total OpenAI today provides more than 50 AI models for various objectives and purposes. If you are curious about the other models, we have a separate Python API tutorial that can be used to list all the OpenAI models.

9- The best AI writing tool I've ever used

As an AI language model, ChatGPT has exceeded my expectations and proved to be the best writing tool I’ve ever used. Its ability to generate coherent and articulate pieces of writing is simply amazing. Whether it is a short query or a lengthy paper, ChatGPT is capable of producing high-quality content that is not just informative but also engaging.

What impresses me the most about ChatGPT is its flexibility. It can easily adapt to any given topic and produce content that is custom-tailored to specific needs. Parameters work exceptionally well. ChatGPT has saved me a lot of time and effort already, and it makes the language related creative processes much more enjoyable.

In summary, ChatGPT is an AI language model that is perfect for anyone who loves writing. Its ability to generate high-quality content quickly, efficiently, and accurately is unmatched. I highly recommend this tool to anyone who wants to boost their writing skills and save time.

10- Troubleshooting ChatGPT API Errors

There are 7 error types embedded in the OpenAI library. You can find these error types below along with suggested solutions to try. Additionally, if you are suspecting the errors are caused by OpenAI services rather than your system, you can always check the state of OpenAI API.

APIError:

Points to an error on OpenAI servers.

Solution: Wait or contact OpenAI. Not much to do here.

Timeout:

Requests can time out due to glitches or temporary errors in the communication.

Solution: Retry in a few minutes.

RateLimitError:

This means you have reached the rate limits for the specific model’s usage. Excessive usage per minute will cause this error.

Solution: You can distribute your API calls or pace them with stops in between. OpenAI rates vary based on models. You can read about rates of OpenAI API here.

APIConnectionError:

This points to a network issue or incompatibility in the user’s system. It can be caused by network settings, connection errors, firewall restrictions, VPN usage, Proxy setting, network admin restrictions, TLS/SSL encryption problems and other such issues related to the user’s network. Many students will likely face APIConnectionError since schools/universities see ChatGPT’s usage as a threat for certain assessments such as essay writing exams.

Solution: Check the network, if possible another computer or network or different OS can be used to single out the exact problem. In some cases VPN usage might be helpful to overcome restrictions (Please make sure to comply with the institutions you are related to). It’s also possible that in some cases VPN usage might be the culprit which can be quickly debugged by momentarily switching the VPN off to see if the error goes away.

InvalidRequestError:

This is an error that’s most likely related to the developer’s work. It can be an error in the function name, parameter name, parameter value compatibility and/or json compression conflicts.

Solution: This requires debugging of your code. You can break it up to multiple pieces and debug in chunks which is usually helpful in detecting where the problem is in the API post/request pipeline.

AuthenticationError:

Points to an error with the API key.

Solution: You can check the key and make sure it’s valid in your Account’s key page.

ServiceUnavailableError:

Self-explanatory API error. It means OpenAI isn’t functioning in general.

Solution: Wait or contact OpenAI. Not much to do here.

Summary

ChatGPT was announced towards the end of 2022 and by February 2023 it already had 100M active users. In March 2023 OpenAI announced the ChatGPT API which can be used by developers and researchers to embed ChatGPT in applications or create Python scripts with this state-of-art AI language model.

ChatGPT API, developed with Python coding in mind, is the ultimate tool for developers looking to harness the power of OpenAI’s latest language model. With reduced pricing and easy-to-use functions, it’s never been easier to take advantage of this groundbreaking AI technology. In this ChatGPT API tutorial with Python, we walked you through everything you need to know to get started with ChatGPT, including getting credits and integrating it into your projects. Get ready to take your NLP game to the next level with ChatGPT!

FAQ

ChatGPT API was announced on 1st of March 2023 alongside with Whisper API and they have been available since then. You can sign up to OpenAI for free and get free api credits.

Additionally, Microsoft Azure also has a ChatGPT API service which provides many benefits and synergies from Azure Cloud Computing Services. Azure OpenAI ChatGPT is however application based and you need to apply through a company. Applications can be submitted through 30 June 2023 and decisions take up to 10 business days.

ChatGPT is a Large Language Model based on Neural Networks.

Neural Networks were invented in 1980s.

In 1997, Sepp Hochreiter and Jürgen Schmidhuber invented Long Short-Term Memory (LSTM) language models which made it possible for ai models to remember the words and connections they are trained on.

20 years later in 2017, Google Research scientists invented Transformer Architect which is a type of Neural Network ChatGPT and all the GPT models from OpenAI are based on.

Finally, RLHF (Reinforcement Learning from Human Feedback) was the cherry on top for ChatGPT’s dazzling performance.

Additionally, ChatGPT API is a Rest API service which provides an Application Programming Interface and standardized data representation methods for developers to conveniently work in large scales. APIs make integration across different languages, programs and systems simple and straightforward.

ChatGPT is a language model based on Transformer architecture which is a Deep Learning implementation commonly used with high success rates in NLP field.

Deep Learning models consist of layers, more specifically in ChatGPT case we have an Input Layer, an Output Layer and many hidden layers in between that execute various different tasks related to AI and Natural Language Processing.

ChatGPT API was launched with 90% price drop compared to the previous gpt-3 model (or 10X cost saving). ChatGPT API costs 0.002 USD per token.

Upon signing up currently you can claim $5 free credits which correspond to 2.5 Million Free Tokens.

ChatGPT can be seen as the product or the umbrella term given to the latest implementation of gpt ai models. In technical terms, it is based on the gpt-3.5-turbo model which. So, one can be seen as the product name while the other name is more suitable when referring to the model in IT terminology.

ChatGPT API is a powerful natural language processing (NLP) technology based on GPT-3.5 Turbo. It is designed to enable developers to easily build conversational AI applications that can understand and respond to human input in real-time. With the help of ChatGPT API, developers can create intelligent chatbots that are capable of understanding natural language and responding accordingly.

OpenAI doesn’t use data submitted through ChatGPT API for improvements anymore. Keep in mind your data is still kept for 30 days in the system’s history.

If you want to help the improvements of models at OpenAI you can specifically opt-in for that.

OpenAI data privacy policy currently states that data submitted through ChatGPT API is kept for 30 days.

However, you can request to change this value for application specific purposes.

It also offers opt-in for data sharing for improvement of the models but this isn’t the default behavior anymore.

The OpenAI API provides access to a wide range of pre-trained models and datasets, allowing developers to quickly get up and running with their projects. The API supports various programming languages such as Python, Go, JavaScript, NodeJS, Ruby, Java, C++, C#, and PHP making it easy for developers to integrate it into their existing projects.

All in all, ChatGPT API is an excellent choice for those looking to develop sophisticated conversational AI applications powered by GPT-3.5 Turbo. You can use the chatgpt api for only 0.002 cents per token. (More on prices in the article.)