History of Speech-to-Text Models & Current Infrastructure

As speech technology continues to advance, so too do the models that power it. From early traditional algorithms to modern machine learning models, the field has come a long way in a relatively short amount of time. Especially today, we are experiencing breakthroughs and paradigm shifts with the rise of neural networks and applications based on the Transformer architecture.

In this article, we will take a deep dive into the history of AI models in speech-to-text and explore how these models have evolved over time, bringing us closer to achieving human-level accuracy in this critical technology. Additionally we will briefly cover the current infrastructures that redefine almost all industries through innovative implementation of AI models on computational cloud services.

Contents

History of Speech-to-Text Models

We can see several scientific and technological milestones as well as accelerated innovation in the short history of speech-to-text models since 1950s. We’ll take a closer look at the exciting developments that occurred in this field throughout the decades.

Pre-2000

The history of speech-to-text (STT) dates back to the early 1950s, when researchers first began to explore the use of computers to recognize speech. Here is a brief timeline of some of the key developments in the history of STT:

1952:

Researchers at Bell Laboratories developed the “Audrey” system, which could recognize single-digit spoken numbers.

1960s:

The advent of digital signal processing (DSP) and the development of algorithms for pattern recognition led to significant progress in speech recognition research.

1970s:

The “Hidden Markov Model” (HMM) was developed, which became a popular approach for speech recognition. HMMs use statistical models to represent the probability of speech sounds and transitions between them. “Markov Models for Pattern Recognition: From Theory to Applications” by Lawrence R. Rabiner, published in 1989 was particularly influential. In this paper, Rabiner described the use of Hidden Markov Models (HMMs) for automatic speech recognition (ASR), which revolutionized the field of speech recognition and has remained a cornerstone approach ever since. His work contributed significantly to the development of practical ASR systems that were accurate and efficient, and paved the way for future research in this area. Here is a technical tutorial on HMM and Selected Applications in Speech Recognition.

1980s: The “Dynamic Time Warping” algorithm was developed, which could recognize speech in noisy environments. Professor Romain Tavenard from Université Rennes 2 has a great article regarding Dynamic Time Warping and its algorithmic foundation. Professor Tavenard also has an open source Dynamic Time Warping repo where source code for applications of tslearn (time series machine learning) with Python can be found along with many other interesting repositories such as K-means clustering demonstrations. Tslearn is a go-to Python library for implementing DTW with time series.

1990s: The development of neural networks and machine learning algorithms led to significant progress in speech recognition. Large vocabulary speech recognition systems also became more widely available.

Early Millenia

2000s:

The development of deep learning techniques in general, such as convolutional neural networks (CNNs) and recurrent neural networks (RNNs), led to significant improvements in speech recognition accuracy.

2010s:

The advent of big data and cloud computing led to the development of more powerful speech recognition systems that could process vast amounts of data. Consumer applications of speech recognition, such as virtual assistants and voice-controlled devices, also became more widely available.

Following research papers have been highly influential in advancing the field of speech-to-text technology using RNN and CNN.

- “Convolutional Neural Networks for Speech Recognition” by O. Abdel-Hamid et al. published in 2012.

- “Improving neural networks by preventing co-adaptation of feature detectors” by G. Hinton et al. published in 2012.

- “Speech Recognition with Deep Recurrent Neural Networks” by A. Graves et al. published in 2013.

- “Deep Speech: Scaling up end-to-end speech recognition” by A. Hannun et al. published in 2014.

- “Very Deep Convolutional Networks for Large-Scale Speech Recognition” by A. Senior et al. published in 2015.

Post 2015

2015:

Listen, Attend and Spell (LAS): Developed by Carnegie Mellon University in 2015, LAS is a neural network model for speech recognition that uses an encoder-decoder architecture with an attention mechanism. It is designed to be highly accurate and can handle noisy speech data.

Deep Speech 2: Developed by Baidu in 2015, Deep Speech 2 is a neural network model for speech recognition that uses a deep learning architecture based on convolutional and recurrent neural networks. It achieves state-of-the-art performance on several speech recognition benchmarks.

2017:

MobileNet: Developed by Google in 2017, MobileNet is a neural network model for speech recognition that uses a lightweight architecture optimized for mobile devices. It is designed to be fast and efficient, making it well-suited for real-time speech recognition applications on mobile devices.

2019:

Speech Recognition Transformer (SRT): Developed by Microsoft in 2019, SRT is a neural network model for speech recognition that uses a transformer architecture with a hybrid CTC/attention decoding mechanism. It achieves state-of-the-art performance on several speech recognition benchmarks.

RNN-T: Developed by Google in 2019, RNN-T is a neural network model for speech recognition that uses a recurrent neural network (RNN) transducer architecture. It is designed to be highly efficient and can handle long sequences of speech data.

Large Scale Multilingual Speech Recognition with Streaming E2E: Developed by Google in 2019, the Streaming End to End Models are neural networks for real time speech recognition that use end to end architecture with a streaming attention mechanism. It is designed to be highly efficient and can handle long sequences of speech data in real-time.

2020:

2020: Speech-XLNet: Developed by the University of Science and Technology of China in 2020, Speech-XLNet is a neural network model for speech recognition that uses a transformer architecture with a novel permutation-based pre-training approach. It achieves state-of-the-art performance on several speech recognition benchmarks.

2021:

Speech Recognition with Augmented Contextual Representation (SCAR): Developed by Google in 2021, SCAR is a neural network model for speech recognition that uses a transformer architecture with an augmented contextual representation mechanism. It achieves state-of-the-art performance on several speech recognition benchmarks.

2022:

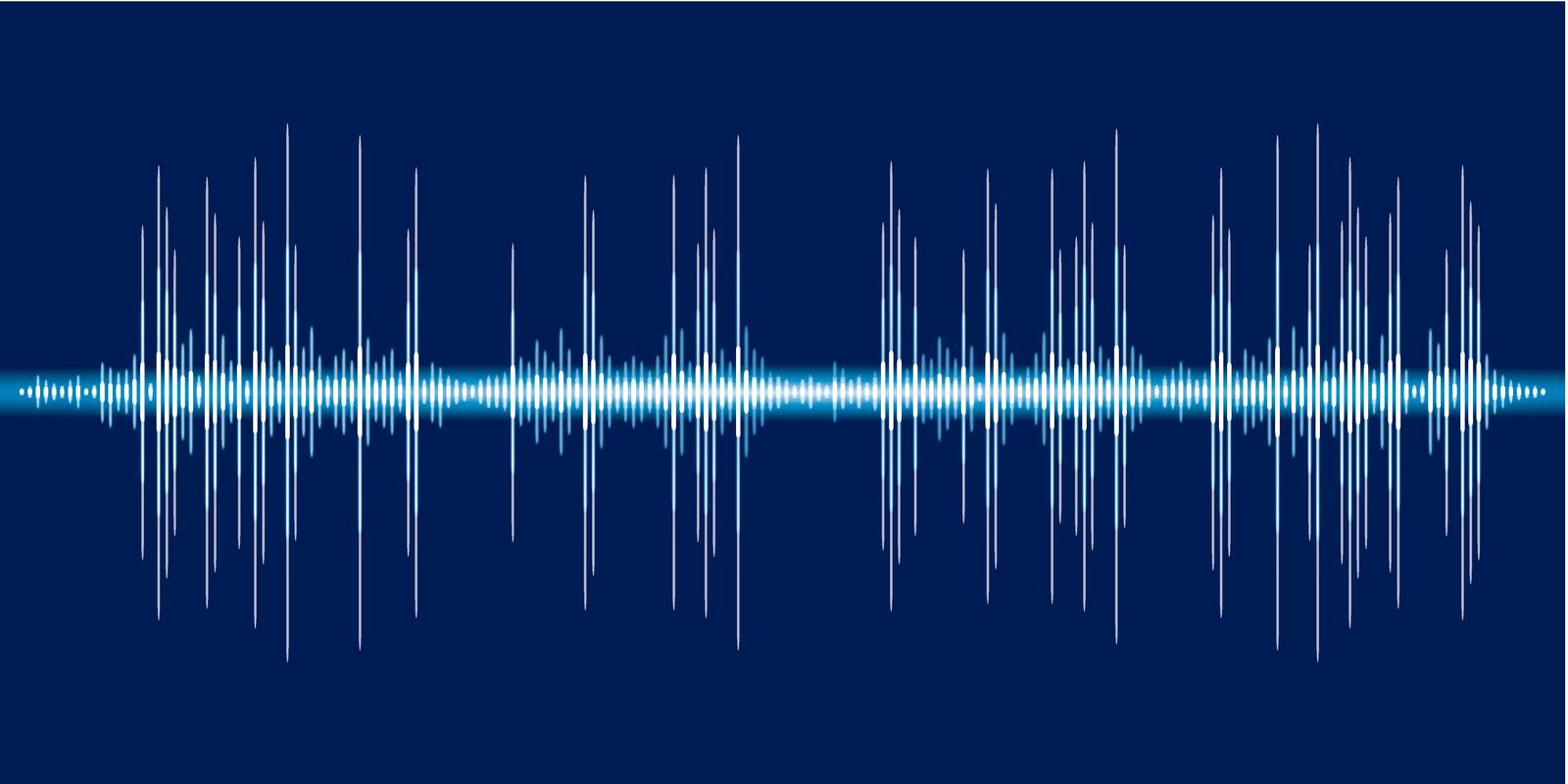

OpanAI published and open sourced Whisper speech-to-text models. Open source whisper models; tiny, small, medium, large, large-v2, tiny.en, base.en, small.en and medium.en are available via models open source Whisper Github Repository.

Open AI Whisper

2023:

Whisper API: Whisper-1 is available via OpenAI’s API on its developer platform along with ChatGPT API. You can see this tutorial of detailed explanation and developer guide for Whisper API services. Whisper is based on the AI architecture introduced by Google named transformer.

The architecture’s main strength is its ability to take into account the dependencies among different parts of the speech input when generating the text output. This approach is more effective than traditional methods for processing such as convolutional neural networks, as it is less dependent on the order in which the input is sequenced.

2024 and beyond:

With the recent advancements in AI technologies, we can expect to use speech to interact with multimodal AI models which have the potential to become precursors of Artificial General Intelligence.

Today, speech recognition technology is mostly a solved problem with AI models accurately being able to transcribe and translate almost 100 languages of our world.

Speech-to-text is used in a wide variety of applications, from virtual assistants and auto-translators to automated transcription services. Ongoing research in machine learning and artificial intelligence is likely to continue to drive progress in the field of speech-to-text in the coming years but we also expect to see many developments in multimodal AI applications and possibly, eventually AGI.

OpenAI models are quite the hot topic right now. OpenAI has a very useful API structure which you can access with Python and leverage this edge-cutting modern technology.

In this tutorial, we will demonstrate a few examples of OpenAI’s API using Python and the openai library.

Speech-to-Text on Cloud

“Speech-To-Text on Cloud” refers to the process of generating text from spoken words using cloud computing services. In other words, users can speak into a microphone or phone, and their words are automatically transcribed into text format by leveraging cloud-based tools and resources. This approach is becoming increasingly popular due to its convenience, scalability, and affordability, as it eliminates the need for on-premise hardware and software installations. Cloud providers offer Speech-To-Text services that use algorithms derived from machine learning and natural language processing to convert audio recordings to text accurately, quickly, and with high fidelity.

OpenAI Whisper API:

Whisper API has recently been made available and it might provide an ideal infrastructure to interact with OpenAI’s popular AI service ChatGPT. Whisper speech-to-text API costs $0.006 per 1 minute audio so it is quite affordable and given the experiences OpenAI recently had with scaling and optimizing their systems, Whisper should provide a flawless experience. AI developers can sign up and get FREE credits to test their API services and the free credits go a very long way in terms of testing and demonstrations.

Google Cloud Speech-to-Text:

Google Cloud Speech-to-Text is a powerful cloud-based speech recognition service that can transcribe audio into text with high accuracy. It can recognize speech in over 120 languages and variants, and supports various audio formats such as FLAC, WAV, and MP3. It also supports various features such as real-time streaming and automatic punctuation, and can handle noisy backgrounds and different speaking styles. However, Google’s premium services can be a bit expensive especially if you process a very large volume of data.

Google Speech-to-Text uses deep learning algorithms to convert audio to text. It supports multiple languages and can transcribe audio from various sources, including video files, streaming audio, meeting recordings and telephone calls.

Microsoft Azure Cognitive Services Speech Services:

Microsoft Azure Speech Services is a cloud-based speech recognition service that offers both speech-to-text and text-to-speech capabilities. It supports various languages and accents, and offers features such as real-time streaming, custom models, and automatic language detection. It also integrates with other Azure services such as Azure Cognitive Services and Azure Machine Learning. However, it can be a bit complicated to set up and configure compared to other services.

Amazon Transcribe:

Amazon Transcribe is a cloud-based speech recognition service that can convert speech to text in real-time. It supports various audio and video formats, and can recognize speech in multiple languages and accents. It also offers features such as automatic punctuation, speaker identification, and custom vocabularies. However, it doesn’t support streaming transcription, and the accuracy can be affected by background noise or poor audio quality.

IBM Watson Speech to Text:

IBM Watson Speech to Text is a cloud-based speech recognition service that supports various languages and dialects. It can convert speech to text with high accuracy and offers features such as speaker diarization, real-time streaming, and custom models. It also integrates with other IBM Watson services such as Watson Studio and Watson Assistant. However, it can be expensive to use if you process a large volume of data.

Oracle Cloud Infrastructure Speech-to-Text:

OCI Speech is a cloud-based speech recognition service that uses deep learning algorithms to convert audio to text. It supports multiple languages and can transcribe audio from various sources, including video files, streaming audio, and telephone calls. It also offers real-time transcription and keyword spotting capabilities.

Speechmatics:

Speechmatics is a cloud-based speech recognition service headquartered in Cambridge, England. The company uses deep learning algorithms to convert audio to text. It supports multiple languages and can transcribe audio from various sources, including video files, streaming audio, and telephone calls. They are highly specialized in speech technologies.

Otter.ai:

Otter.ai is a cloud-based speech recognition web app that uses deep learning algorithms to convert audio to text. It supports multiple languages and can transcribe audio from various sources, including video files, streaming audio, and telephone calls. They have a free tier for individual to try users as well as pro and business tiers. A very modern user interface and with free tier option you can’t really lose much by trying if it seems like an interesting service to you. They provide services for Business and Education applications. Otter.ai is well positioned for the current AI transformation that’s happening in the world right now and their integrated voice assistants can help you save time and get more done.

Other Speech-to-Text Alternatives:

Rev.ai: Rev.ai is a cloud-based speech recognition service that uses deep learning algorithms to convert audio to text. It supports multiple languages and can transcribe audio from various sources, including video files, streaming audio, and telephone calls. Rev.ai has a free trial tier for individual users and also great use case scenarios so you can have a pleasant experience and learn about speech-to-text without even paying anything. That being said their processing cost per minute for audio might need reconsideration after the recent developments such as Whisper API they might have to reconsider their pricing for transcription services.

Speech Recognition Anywhere: Speech Recognition Anywhere is an interesting Chrome extension which can be used for filling forms and other documents and even Google Docs and Spreadsheets. Mostly suitable for individual use cases.

Voicebase: Voicebase is a cloud-based speech recognition service that uses deep learning algorithms to convert audio to text. It supports multiple languages and can transcribe audio from various sources, including video files, streaming audio, and telephone calls. It also offers keyword spotting, entity extraction, and sentiment analysis capabilities. They have specialized services for telecommunication, financial services, healthcare and other industries suitable for enterprise solutions.

Kaldi Speech Recognition Toolkit: Kaldi is an open-source speech recognition toolkit that can be deployed on the cloud. It uses deep learning algorithms to convert audio to text and supports multiple languages. It also offers speaker recognition and language understanding capabilities.

Pros and Cons of Speech-to-text Cloud Services

Pros:

All of these speech-to-text cloud services offer high accuracy and support various languages and accents. They also offer various features such as real-time streaming, speaker identification, and custom vocabularies. Additionally, they can easily integrate with other cloud services and offer developer-friendly APIs and SDKs that can be used with Python and other modern programming languages.

Cons:

However, some of these services can be expensive, especially if you process a large volume of data. Also, the accuracy can be affected by background noise or poor audio quality, and some services such as Amazon Transcribe don’t support streaming transcription. Finally, some services such as Microsoft Azure Speech Services can be a bit complicated to set up and configure compared to other options.

Summary

We have provided a comprehensive overview of the evolution of speech recognition technology in our article titled “History of Speech-to-Text AI Models”. We trace the early beginnings of speech recognition research to the 1950s and the development of the first commercial speech recognition systems in the 1980s.

Our article then delved into the major milestones of the last two decades and development of more sophisticated speech-to-text models, from early statistical models to the latest deep learning architectures. We highlighted some of the key advancements that have enabled such progress, such as the availability of large speech corpora and advances in graphics processing units (GPUs).

We emphasize the importance of responsible AI as the significant impact of major breakthroughs in machine learning start to have visible effects in our society. Introduction of the transformer architecture started a new paradigm in AI since early 2020. If there is any message we can conclude from the history of speech-to-text AI models and the accelerated growth in innovative applications in this domain, it is that there will be a lot of impactful development in near term and long term.